Synchronous mirroring is a feature in which data is mirrored between two storage systems (nodes) over a network. The two systems are usually connected by a private network to minimize interference with other data traffic.

Compared with asynchronous mirroring and delayed replication, synchronous mirroring has higher write latency, since a write received by one system is considered complete only when the corresponding data has been written on both nodes.

However, in the event of a node failure, clients can continue working, as the data on the other node is a mirror image of the data on the failed node.

Open systems such as Linux and FreeBSD have several implementations of synchronous mirroring, for example DRBD for Linux and HAST for FreeBSD.

However, every implementation has to address (and has addressed to some extent) the biggest issue with synchronous mirroring, namely write latency. Write latency is unavoidable, as a write operation has to be acknowledged as completed by both nodes.
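
As a rough illustration of why the latency penalty is unavoidable, the sketch below models a synchronous write as finishing when the slower of two paths finishes: the local write, or the network round trip plus the write on the peer. It assumes both paths start in parallel. The function names and the timings (in microseconds) are illustrative assumptions, not measurements of any product.

```python
def sync_write_latency(local_us: int, remote_us: int, rtt_us: int) -> int:
    """Latency of a synchronous mirrored write.

    Assumes the local write and the transfer to the peer start in parallel,
    so the client waits for whichever path finishes last.
    """
    return max(local_us, rtt_us + remote_us)


def async_write_latency(local_us: int) -> int:
    """Latency of an asynchronous mirrored write.

    The peer is updated later, so only the local write is on the
    client's critical path.
    """
    return local_us


if __name__ == "__main__":
    # Hypothetical numbers: 500 us disk write on each node, 200 us round trip.
    print(sync_write_latency(500, 500, 200))  # 700
    print(async_write_latency(500))           # 500
```

Under this model the synchronous write can never be faster than the asynchronous one, and the gap grows with the round-trip time between the nodes.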

When we set out to implement synchronous mirroring in our product, the first and most obvious idea was to use DRBD for our Linux implementation and HAST for our FreeBSD implementation.

The complexity of using two different implementations aside, these implementations did not suit our design approach.

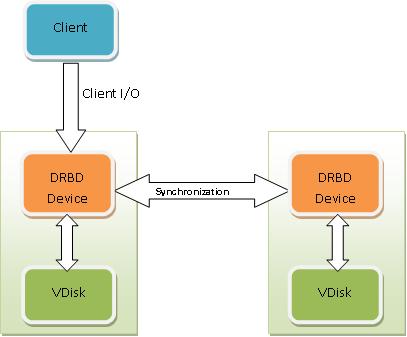

The first issue we would face is specifying a VDisk as a DRBD device. In a typical DRBD configuration, mirroring takes place between two DRBD devices. Each DRBD device corresponds to an underlying physical or logical disk (LVM volume, RAID LUN, etc.). Rather than writing to the physical disk, writes are sent to the DRBD device. It is then the job of the DRBD device to write the data to its underlying disk and to the peer DRBD device on the other node.

In a QUADStor implementation, this can be achieved by specifying a VDisk as the underlying physical disk for a DRBD device, as illustrated in the figure.

However, this works only if the DRBD device is accessed locally by the system.

For example:

Write Command -> DRBD Device -> VDisk

In the above scenario, the DRBD device will synchronize data to its DRBD peer on the second node, which also has a VDisk as its underlying physical disk.

This is possible because the DRBD device sees the VDisk as a locally accessible /dev/sd[x] on Linux and /dev/quadstor/<VDisk Name> on FreeBSD.

However, writes received by the VDisk's iSCSI and FC interfaces are not routed through the DRBD device. In fact, they are not even seen by /dev/sd[x] or /dev/quadstor/<VDisk Name>.

One possibility is to run another iSCSI target/FC implementation on top of the DRBD device. The write path would then be:

Write Command -> FC/iSCSI interface -> DRBD Device -> VDisk

However, such an implementation has other issues. For example, VAAI-related commands such as XCOPY will be lost between the FC/iSCSI interface and the DRBD device. The same applies to other SCSI commands, such as SPC-3 Persistent Reservation commands.

Data Deduplication

The biggest factor in not choosing an external mirroring implementation is data deduplication (data dedupe). For example, consider the same write operation:

Write Command -> DRBD Device -> VDisk

The fingerprint (hash) computation has to be done on both nodes.

Suppose the write operation is 1 MB in size and the entire 1 MB can be deduplicated by both VDisks. The DRBD device, however, has no knowledge of the deduplication capabilities of the underlying VDisk and will send the entire 1 MB across to the peer DRBD device.

QUADStor Implementation

With the QUADStor implementation, two VDisks on two different nodes are tightly integrated for synchronous mirroring:

- Hashes for the purpose of deduplication are computed only on the node that receives a write operation

- Data which can be deduplicated on the peer node is never sent over the network

- The interpretation of SCSI commands such as XCOPY and SCSI Persistent Reservation is never lost between the nodes

With the optimizations mentioned above, the QUADStor implementation lowers the network bandwidth requirements between the nodes. Deduplication also helps reduce the number of disk I/O operations on both nodes and reduces the latency of write operations.
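
The bandwidth saving can be illustrated with a minimal sketch. It assumes fixed 4 KiB blocks, SHA-256 fingerprints, and a peer that can report which fingerprints it already stores. None of these details are taken from the QUADStor implementation, and `Peer`, `replicate_write`, and `BLOCK_SIZE` are hypothetical names used only for illustration.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size, for illustration only


class Peer:
    """Stand-in for the mirror node: a fingerprint-indexed block store."""

    def __init__(self):
        self.blocks = {}  # fingerprint -> block data

    def missing(self, fingerprints):
        """Return the fingerprints the peer does not already store."""
        return {fp for fp in fingerprints if fp not in self.blocks}

    def store(self, fp, data):
        self.blocks[fp] = data


def replicate_write(data: bytes, peer: Peer) -> int:
    """Mirror `data` to `peer` and return the number of payload bytes sent."""
    chunks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    # Fingerprints are computed once, on the node that received the write.
    fps = [hashlib.sha256(chunk).digest() for chunk in chunks]
    needed = peer.missing(fps)
    sent = 0
    for fp, chunk in zip(fps, chunks):
        if fp in needed:
            peer.store(fp, chunk)
            needed.discard(fp)  # send each unique block only once
            sent += len(chunk)
    return sent


if __name__ == "__main__":
    peer = Peer()
    # 256 distinct 4 KiB blocks, 1 MiB in total.
    data = b"".join(i.to_bytes(4, "big") * 1024 for i in range(256))
    print(replicate_write(data, peer))  # first write: every block is new
    print(replicate_write(data, peer))  # repeat write: fully deduplicated
```

In this sketch a repeated 1 MiB write sends no block data at all; only the 32-byte fingerprints would need to cross the network, whereas a mirroring layer that is unaware of deduplication would resend the full 1 MiB.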

High Availability

With synchronous mirroring, both VDisks contain the same data at any point in time. Data received by a VDisk is synced to its mirror peer, and the status of the write operation is sent back to the client only when both VDisks have completed the write.

When one of the nodes goes down, the other VDisk can still service client write/read requests.
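
The write path described above can be sketched as follows. This is a simplified in-memory model, not the product's code: `VDisk`, `mirrored_write`, and the `"mirrored"`/`"degraded"` status strings are hypothetical, and a real system would confirm that the peer is fenced before continuing alone.

```python
class VDisk:
    """In-memory stand-in for a VDisk; `online` simulates the node state."""

    def __init__(self, name: str):
        self.name = name
        self.online = True
        self.blocks = {}  # logical block address -> data

    def write(self, lba: int, data: bytes) -> None:
        if not self.online:
            raise ConnectionError(f"{self.name} is offline")
        self.blocks[lba] = data


def mirrored_write(local: VDisk, peer: VDisk, lba: int, data: bytes) -> str:
    """Write to the receiving VDisk and its peer before reporting status.

    Returns "mirrored" when both copies were written, or "degraded" when
    the peer was unreachable and only the local copy was written.
    """
    local.write(lba, data)
    try:
        peer.write(lba, data)
    except ConnectionError:
        # Assumption: the surviving VDisk keeps serving I/O on its own.
        return "degraded"
    return "mirrored"


if __name__ == "__main__":
    a, b = VDisk("node1-vdisk"), VDisk("node2-vdisk")
    print(mirrored_write(a, b, 0, b"x"))  # mirrored
    b.online = False                       # simulate a node failure
    print(mirrored_write(a, b, 1, b"y"))  # degraded
```

The status is returned only after both writes have been attempted, which is what makes the mirror safe to fail over to and also what adds the extra write latency.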

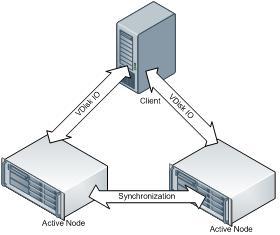

Active-Active Clustering

VDisks in a mirroring setup can be accessed simultaneously from both nodes. A client is unaware that the two VDisks are separate entities and treats them as multiple paths to the same disk. To facilitate active-active I/O, we support two sets of SCSI commands:

COMPARE AND WRITE (ATOMIC TEST AND SET / ATS)

The SCSI COMPARE AND WRITE command is used by cluster-aware applications, for example ESXi, for resource locking. Irrespective of which VDisk mirror receives the command, a successful status means that the COMPARE AND WRITE operation succeeded on both VDisks.
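
The semantics can be sketched with a small model in which one logical block is held by both mirrors. `MirroredBlock` is a hypothetical name, and the `threading.Lock` merely stands in for whatever cross-node coordination a real implementation uses; the point is only that the compare and the write happen as one atomic step covering both copies.

```python
import threading


class MirroredBlock:
    """One logical block held by two mirror VDisks (hypothetical model)."""

    def __init__(self, initial: bytes):
        self.copies = [initial, initial]  # one copy per VDisk
        self._lock = threading.Lock()     # stands in for cross-node locking

    def compare_and_write(self, expected: bytes, new: bytes) -> bool:
        """Write `new` to both copies only if the block equals `expected`.

        Returns False on a miscompare, in which case nothing is written.
        """
        with self._lock:
            if any(copy != expected for copy in self.copies):
                return False
            self.copies = [new, new]
            return True


if __name__ == "__main__":
    lock_block = MirroredBlock(b"free")
    print(lock_block.compare_and_write(b"free", b"hostA"))  # True: lock taken
    print(lock_block.compare_and_write(b"free", b"hostB"))  # False: already held
```

Two hosts racing for the same on-disk lock therefore see a consistent winner, regardless of which node each of them sent the command to.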

SCSI Persistent Reservations

SCSI Persistent Reservation commands are used by most cluster-aware applications for resource locking. Registration and reservation commands received by one VDisk are executed by both VDisks, and the response is sent back to the client by the VDisk that originally received the command. Since the client sees the two VDisks as multiple paths to the same disk, it needs to register its reservation keys on both paths (VDisks).
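
A much simplified model of this behaviour is sketched below. It tracks only registrations per path and a single reservation holder, ignores reservation types and the other PERSISTENT RESERVE service actions, and uses hypothetical names (`MirroredReservations`, `register`, `reserve`) that do not come from the product.

```python
class MirroredReservations:
    """Reservation state kept identical on both VDisks (hypothetical model)."""

    def __init__(self):
        # One table per VDisk: path name -> registered key.
        self.tables = [{}, {}]
        # Reservation holder as recorded by each VDisk.
        self.holder = [None, None]

    def register(self, path: str, key: int) -> None:
        """REGISTER received on `path`; executed on both VDisks."""
        for table in self.tables:
            table[path] = key

    def reserve(self, path: str, key: int) -> bool:
        """RESERVE received on `path`.

        Succeeds only if `key` was registered on that path and no other
        key currently holds the reservation.
        """
        if self.tables[0].get(path) != key:
            return False
        if self.holder[0] not in (None, key):
            return False
        self.holder = [key, key]
        return True


if __name__ == "__main__":
    pr = MirroredReservations()
    pr.register("path-to-node1", 0xABC)
    print(pr.reserve("path-to-node1", 0xABC))  # True
    print(pr.reserve("path-to-node2", 0xABC))  # False: path not registered
```

The second call fails because the key was registered only on the first path, which is why a client has to register on both paths even though both VDisks hold the same reservation state.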

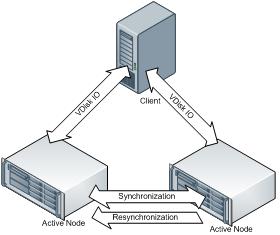

Handling Node Failures

When a node fails or goes offline, its VDisks are no longer accessible to clients. The peer VDisks, however, can still handle client I/O requests. When the node comes back online, the data in its VDisks is out of sync with the respective peer mirror VDisks, so the data from the active VDisks is synced back to the VDisks on the returning node. Resynchronization might take a long time, depending on the amount of data that needs to be resynced, but only changed data blocks are resynced. Both peer VDisks remain accessible during the resynchronization operation.
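
One common way to resync only changed blocks is to record which blocks were written while the peer was offline. The sketch below assumes such a dirty-block set; how QUADStor actually tracks changes is not described here, and `write_degraded` and `resync` are hypothetical helper names.

```python
def write_degraded(active: dict, dirty: set, lba: int, data: bytes) -> None:
    """Write to the surviving VDisk and remember that the block changed."""
    active[lba] = data
    dirty.add(lba)


def resync(active: dict, returning: dict, dirty: set) -> int:
    """Copy only the changed blocks to the VDisk that came back online.

    Returns the number of blocks copied and clears the dirty set.
    """
    copied = 0
    for lba in sorted(dirty):
        returning[lba] = active[lba]
        copied += 1
    dirty.clear()
    return copied


if __name__ == "__main__":
    active = {0: b"a", 1: b"b"}
    returning = dict(active)   # in sync before the failure
    dirty = set()

    # The peer is offline; the client keeps writing to the active VDisk.
    write_degraded(active, dirty, 1, b"B")
    write_degraded(active, dirty, 2, b"c")

    print(resync(active, returning, dirty))  # 2 blocks copied, not 3
    print(returning == active)               # True
```

The cost of the resync is then proportional to the amount of data written during the outage rather than to the size of the VDisk.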

The following figures illustrate a client accessing VDisks simultaneously from both nodes, a node failure during which the client continues writing to VDisks on the active node, and the process of resynchronization when the failed node is back online.

Node Fencing

Node fencing is required to avoid split-brain scenarios. It is achieved with the stonith (SLES, FreeBSD, Debian) and fence-agent (RHEL) packages. The mirroring configuration allows a fence command to be specified, which is called when a VDisk needs to fence its peer.
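
How a configured fence command might be used is sketched below. The assumptions are that the command signals success with exit status 0 and that a node which cannot fence its peer stops serving I/O rather than risk split-brain. The function names, the status strings, and the timeout are hypothetical, and the actual fence command depends on the fencing package in use.

```python
import subprocess
import sys
from typing import Sequence


def fence_peer(fence_cmd: Sequence[str], timeout_s: float = 30.0) -> bool:
    """Run the configured fence command; True only if it exits with status 0."""
    try:
        result = subprocess.run(list(fence_cmd), timeout=timeout_s)
    except (OSError, subprocess.TimeoutExpired):
        # Command missing, not executable, or hung: treat as not fenced.
        return False
    return result.returncode == 0


def take_over_if_fenced(fence_cmd: Sequence[str]) -> str:
    """Continue serving I/O alone only after the peer is confirmed fenced."""
    return "active-alone" if fence_peer(fence_cmd) else "io-suspended"


if __name__ == "__main__":
    # Stand-ins for a real fence command: one that succeeds, one that fails.
    ok_cmd = [sys.executable, "-c", "raise SystemExit(0)"]
    bad_cmd = [sys.executable, "-c", "raise SystemExit(1)"]
    print(take_over_if_fenced(ok_cmd))
    print(take_over_if_fenced(bad_cmd))
```

Treating every failure of the fence command as "not fenced" is the conservative choice: if both nodes kept accepting writes without being able to reach each other, their VDisks would diverge.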