With QUADStor synchronous mirroring, the following High Availability (HA) scenarios are possible:

- Active-Active (Dual Primary)

- Active-Passive (Single Primary)

- Active-Passive with Manual Failover

In this tutorial we describe a step-by-step procedure for achieving HA with QUADStor VDisks.

What is Synchronous Mirroring

Synchronous mirroring is block-level replication in real time. Data written to a block on a VDisk is mirrored to a peer VDisk on another node at the same time. QUADStor handles conditions such as a failed write to the peer node or the failure of the peer node itself.

How is HA achieved?

Since the VDisks on the two nodes contain the same data, even if one of the nodes fails, the other node can still serve reads and writes for the VDisk.

HA from a client's perspective

To a client, both VDisks present the same vendor identification, serial number etc. The client sees both VDisks as paths to the same disk and is unaware that the VDisks reside on two different systems. When the client is unable to read/write on one path, it continues on the other path.

The client can access the paths in a failover (active-passive) configuration, a round-robin (active-active) configuration etc.

In a cluster configuration, more than one client can access the VDisk simultaneously. Such a configuration usually requires cluster-aware applications or file systems. To aid such applications, serialized access to the VDisks is maintained through support for SCSI Persistent Reservations (GFS2, OCFS2 etc.) and the SCSI Compare and Write command (ESXi).

Deployment Scenario

In order to describe the HA capabilities of QUADStor we have installed three virtual machines vm10, vm11 and vm12 with CentOS 6.2 as described below

vm10 and vm11 are installed with QUADStor Version 3.0.29 software

vm12 acts as the initiator

The following are the interfaces configured

On vm10:

/sbin/ifconfig

eth0 Link encap:Ethernet HWaddr 52:54:00:23:CE:2C

inet addr:10.0.13.100 Bcast:10.0.13.255 Mask:255.255.255.0

eth1 Link encap:Ethernet HWaddr 52:54:00:03:BC:A8

inet addr:10.0.13.150 Bcast:10.0.13.255 Mask:255.255.255.0

On vm11:

/sbin/ifconfig

eth0 Link encap:Ethernet HWaddr 52:54:00:61:5C:1B

inet addr:10.0.13.101 Bcast:10.0.13.255 Mask:255.255.255.0

eth1 Link encap:Ethernet HWaddr 52:54:00:56:E2:65

inet addr:10.0.13.151 Bcast:10.0.13.255 Mask:255.255.255.0

For our setup we will use 10.0.13.100 and 10.0.13.101 as the interfaces accessed by the client vm12. 10.0.13.150 and 10.0.13.151 will be used as a private network between vm10 and vm11 for mirroring data.

On vm10:

/sbin/route add 10.0.13.151 gw 10.0.13.150 dev eth1

/sbin/route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

10.0.13.151 10.0.13.150 255.255.255.255 UGH 0 0 0 eth1

On vm11:

/sbin/route add 10.0.13.150 gw 10.0.13.151 dev eth1

/sbin/route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

10.0.13.150 10.0.13.151 255.255.255.255 UGH 0 0 0 eth1

We now configure QUADStor to use 10.0.13.150 and 10.0.13.151 as the mirroring addresses

On vm10 the following is added to /quadstor/etc/ndrecv.conf

RecvAddr=10.0.13.150

On vm11 the following is added to /quadstor/etc/ndrecv.conf

RecvAddr=10.0.13.151

By the above steps we have configured vm10 and vm11 to use the network 10.0.13.150 <-> 10.0.13.151 for VDisk mirroring traffic
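Before starting the service it can be useful to confirm that each node's ndrecv.conf really carries the intended mirroring address. The following is a minimal sketch, not part of QUADStor: the helper names recv_addr and check_recv_addr are hypothetical, and it assumes the file holds a single line of the form RecvAddr=ADDRESS as shown above.

```shell
# Hypothetical helpers (not shipped with QUADStor).
# Assumes ndrecv.conf contains one line of the form RecvAddr=<address>.

# recv_addr [FILE]: print the value of the RecvAddr= line.
recv_addr() {
    sed -n 's/^RecvAddr=//p' "${1:-/quadstor/etc/ndrecv.conf}"
}

# check_recv_addr EXPECTED [FILE]: succeed only if the configured
# address equals EXPECTED.
check_recv_addr() {
    actual=$(recv_addr "${2:-/quadstor/etc/ndrecv.conf}")
    if [ "$actual" = "$1" ]; then
        echo "RecvAddr OK: $actual"
    else
        echo "RecvAddr mismatch: expected $1, found '$actual'" >&2
        return 1
    fi
}
```

On vm10 this would be called as check_recv_addr 10.0.13.150, and on vm11 as check_recv_addr 10.0.13.151.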

Configuring Fencing

Since vm10 and vm11 are two virtual machines using QEMU/KVM virtualization, we configure fence_kvm. Useful links for configuring fence_kvm are http://clusterlabs.org/wiki/Guest_Fencing and http://www.daemonzone.net/e/3/

We skip any clustering related configuration. As long as fence_xvm can fence the peer node it is sufficient for us.

Testing fencing

On vm10 to fence vm11 we run the command

/usr/sbin/fence_xvm -H vm11

On vm11 to fence vm10 we run the command

/usr/sbin/fence_xvm -H vm10

QUADStor Service is now started on vm10 and vm11.

To know if the RecvAddr is correctly configured we use the ndconfig command

On vm10:

/quadstor/bin/ndconfig

Node Type: Mirror Recv

Recv Address: 10.0.13.150

Node Status: Recv Inited

On vm11:

/quadstor/bin/ndconfig

Node Type: Mirror Recv

Recv Address: 10.0.13.151

Node Status: Recv Inited

We now configure QUADStor on vm10 and vm11 for fencing

On vm10:

/quadstor/bin/qmirrorcheck -a -t fence -r 10.0.13.151 -v '/usr/sbin/fence_xvm -H vm11'

The above command specifies that in order to fence vm11 we need to run the following command

/usr/sbin/fence_xvm -H vm11

Note that we have specified 10.0.13.151, which is the address vm11 uses for mirroring traffic.

/quadstor/bin/qmirrorcheck -l

Mirror Ipaddr Type Value

10.0.13.151 fence /usr/sbin/fence_xvm -H vm11

On vm11:

/quadstor/bin/qmirrorcheck -a -t fence -r 10.0.13.150 -v '/usr/sbin/fence_xvm -H vm10'

/quadstor/bin/qmirrorcheck -l

Mirror Ipaddr Type Value

10.0.13.150 fence /usr/sbin/fence_xvm -H vm10

Configuring Storage

1. We configure Storage Pools as described in http://www.quadstor.com/storage-pools.html

For this example we are using a pool named 'HAPOOL'. This pool is created on both vm10 and vm11

2. On vm10 and vm11 we now configure physical storage as described in http://www.quadstor.com/support/64-configuring-disk-storage.html

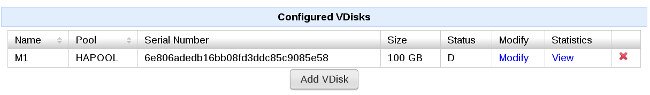

3. We then create a new VDisk M1 on vm10 as shown in the figure below

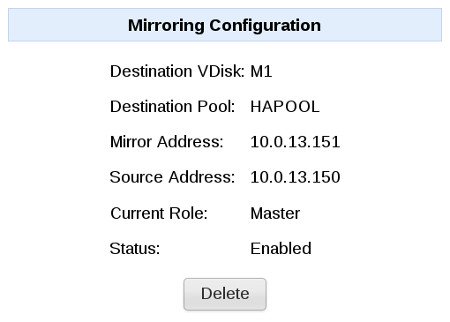

4. We then click on "Modify" and then under the mirroring configuration specify the address of vm11 as shown in the figure below

5. Then we Submit and we are done as shown in the figure below

To know the current roles of a VDisk we run qsync

On vm10:

/quadstor/bin/qsync -l

Local Remote Pool Dest Addr Role Status

M1 M1 HAPOOL 10.0.13.151 Master Enabled

On vm11:

/quadstor/bin/qsync -l

Local Remote Pool Dest Addr Role Status

M1 M1 HAPOOL 10.0.13.150 Slave Enabled

At this point M1 on vm10 is configured to mirror to M1 on vm11. M1 on vm10 acts as the master while M1 on vm11 acts as a slave.

From a performance, speed, or user's point of view there is no difference between a VDisk in a master role and a VDisk in a slave role. However, there is one significant difference: in the absence of the peer, a VDisk can only perform writes in the master role. This ensures data integrity when the peer is lost. In the case of a probable peer failure, the online node ensures that the peer is really offline by fencing it. On a successful fence, a VDisk in the slave role assumes the master role. On a fence failure, a VDisk in the master role assumes the slave role. When a lost peer is back online, new writes to the master VDisk are synced back to the slave VDisk.
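The role rules in the paragraph above can be written down as a small decision table. This is only an illustrative sketch of the described behaviour, not QUADStor code: the function names next_role and can_write_alone are hypothetical, and the two cases the text does not spell out (a master after a successful fence, a slave after a failed fence) are assumed to keep their current role.

```shell
# Illustrative model of the role rules described above; not QUADStor code.

# next_role ROLE FENCE_RESULT
#   ROLE:         master | slave   (role of the surviving VDisk)
#   FENCE_RESULT: ok | failed      (outcome of fencing the peer)
# Prints the role the VDisk holds after the fence attempt.
next_role() {
    case "$1:$2" in
        slave:ok)      echo master ;;  # from the text: slave takes over
        master:failed) echo slave ;;   # from the text: master steps down
        master:ok)     echo master ;;  # assumption: role unchanged
        slave:failed)  echo slave ;;   # assumption: role unchanged
        *) echo "usage: next_role master|slave ok|failed" >&2; return 2 ;;
    esac
}

# can_write_alone ROLE: succeeds only for the role that may accept
# writes while the peer is absent.
can_write_alone() {
    [ "$1" = master ]
}
```

For example, next_role slave ok prints master, which matches the takeover on vm11 shown later in this tutorial.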

SNMP Trap Notification

Before we get to the client configuration, we also configure vm10 and vm11 to send traps to an snmptrapd running on 10.0.13.33.

On vm10:

We add the following line to /quadstor/etc/quadstor.conf

TrapAddr=10.0.13.33

On vm11:

We add the following line to /quadstor/etc/quadstor.conf

TrapAddr=10.0.13.33

On 10.0.13.33 where we have snmptrapd running we copied QUADSTOR-REG.mib and QUADSTOR.mib (available from downloads) to /home/quadstor/mibs

sh# export MIBS="ALL"

sh# export MIBDIR=/home/quadstor/mibs

sh# snmptrapd -Lf /tmp/test.log

sh# tail -f /tmp/test.log

NET-SNMP version 5.7.2

Client configuration

We now configure a Linux client vm12 to access M1 on vm10 and vm11 simultaneously.

Below is our /etc/multipath.conf configuration for QUADStor VDisks

devices {

device {

vendor "QUADSTOR"

product "VDISK"

getuid_callout "/lib/udev/scsi_id --whitelisted --device=/dev/%n"

rr_min_io 100

rr_weight priorities

path_grouping_policy multibus

}

}

vm12 accesses M1 on vm10 and vm11 using the iSCSI protocol

We use the below script to login

list=`/sbin/iscsiadm -m discovery -p 10.0.13.100 -t st | grep "10.0.13.100" | cut -f 2 -d' '`

for i in $list; do

/sbin/iscsiadm -m node -T $i -p 10.0.13.100 -l

done

list=`/sbin/iscsiadm -m discovery -p 10.0.13.101 -t st | grep "10.0.13.101" | cut -f 2 -d' '`

for i in $list; do

/sbin/iscsiadm -m node -T $i -p 10.0.13.101 -l

done

We run the below script when we want to log out

list=`/sbin/iscsiadm -m discovery -p 10.0.13.100 -t st | cut -f 2 -d' '`

for i in $list; do

/sbin/iscsiadm -m node -T $i -u

/sbin/iscsiadm -m node -T $i -o delete

done

list=`/sbin/iscsiadm -m discovery -p 10.0.13.101 -t st | cut -f 2 -d' '`

for i in $list; do

/sbin/iscsiadm -m node -T $i -u

/sbin/iscsiadm -m node -T $i -o delete

done

Running the login script we get

Logging in to [iface: default, target: iqn.2006-06.com.quadstor.vdisk.M1, portal: 10.0.13.100,3260] (multiple)

Login to [iface: default, target: iqn.2006-06.com.quadstor.vdisk.M1, portal: 10.0.13.100,3260] successful.

Logging in to [iface: default, target: iqn.2006-06.com.quadstor.vdisk.M1, portal: 10.0.13.101,3260] (multiple)

Login to [iface: default, target: iqn.2006-06.com.quadstor.vdisk.M1, portal: 10.0.13.101,3260] successful.
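The login script above repeats the same loop once per portal. As a sketch, the two loops can be folded into one function that iterates over a portal list. The names PORTALS, targets_for_portal and login_all are hypothetical, and the filter assumes that discovery prints one record per line in the form ADDRESS:PORT,TPGT TARGETNAME.

```shell
# Sketch only: PORTALS, targets_for_portal and login_all are
# illustrative names, not part of open-iscsi or QUADStor.
ISCSIADM=${ISCSIADM:-/sbin/iscsiadm}
PORTALS="10.0.13.100 10.0.13.101"

# targets_for_portal PORTAL: read discovery records on stdin and print
# the target names whose portal address is exactly PORTAL.
# Assumed record layout: "<address>:<port>,<tpgt> <target-name>".
targets_for_portal() {
    awk -v p="$1" 'index($1, p ":") == 1 { print $2 }'
}

# login_all: discover and log in to every target on every portal.
login_all() {
    for portal in $PORTALS; do
        for target in $($ISCSIADM -m discovery -t st -p "$portal" |
                        targets_for_portal "$portal"); do
            $ISCSIADM -m node -T "$target" -p "$portal" -l
        done
    done
}
```

Matching on the address followed by a colon, rather than a plain grep, avoids picking up records for an address that merely begins with the same digits.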

After login we check the multipath configuration

On vm12:

multipath -ll

mpathba (36ef899ba04190ade706b96ec16c2ed0b) dm-0 QUADSTOR,VDISK

size=100G features='0' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

|- 13:0:0:0 sdg 8:96 active ready running

`- 14:0:0:0 sdh 8:112 active ready running

Now we configure LVM using the following series of commands, although /dev/mapper/mpathba can also be used directly without LVM.
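When scripting failover tests it helps to turn the multipath listing into a number. The following sketch counts the paths reported as active, ready and running. The function name count_ready_paths is hypothetical, and the parsing assumes that each path line ends with the three state words in the order shown in the listing above.

```shell
# Sketch only: count_ready_paths is an illustrative helper.
# Reads `multipath -ll` output on stdin and prints how many path lines
# end with the state words "active ready running" (assumed layout).
count_ready_paths() {
    awk 'NF >= 3 && $(NF-2) == "active" && $(NF-1) == "ready" &&
         $NF == "running" { n++ }
         END { print n + 0 }'
}
```

It would be used as multipath -ll | count_ready_paths. With both nodes up the expected count for this setup is 2, and a path reported as faulty is not counted.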

sh# pvcreate /dev/mapper/mpathba

Writing physical volume data to disk "/dev/mapper/mpathba"

Physical volume "/dev/mapper/mpathba" successfully created

sh# vgcreate vgtest /dev/mapper/mpathba

Volume group "vgtest" successfully created

sh# lvcreate -L99G -n testvol vgtest

Logical volume "testvol" created

sh# /sbin/mkfs.ext4 /dev/mapper/vgtest-testvol

mke2fs 1.41.12 (17-May-2010)

Discarding device blocks: 2621440/25952256

...

...

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 36 mounts or 180 days, whichever comes first. Use tune2fs -c or -i to override.

sh# mount /dev/mapper/vgtest-testvol /mnt/

We now use dd to write data to /mnt/.

sh# dd if=/dev/zero of=/mnt/1 bs=1M count=10000

During the write we force a panic on vm10

sh# echo c > /proc/sysrq-trigger

We now check for failure messages in /var/log/messages on vm11

Mar 17 23:32:54 vm11 kernel: WARN: tdisk_mirror_write_setup:1554 peer takeover, continuing with write command

Mar 17 23:32:54 vm11 mdaemon: WARN: node_usr_notify:556 VDisk M1 switching over to master role

At this point M1 on vm11 takes over as master

On vm11:

sh# /quadstor/bin/qsync -l

Local Remote Pool Dest Addr Role Status

M1 M1 HAPOOL 10.0.13.150 Master Disabled

On 10.0.13.33 we received the following trap

2013-03-17 23:37:12 vm11 [UDP: [10.0.13.101]:38262->[10.0.13.33]:162]:

DISMAN-EVENT-MIB::sysUpTimeInstance = Timeticks: (63838) 0:10:38.38

SNMPv2-MIB::snmpTrapOID.0 = OID: QUADSTOR-TRAPS-MIB::quadstorNotification

QUADSTOR-TRAPS-MIB::notifyType = INTEGER: warning(2)

QUADSTOR-TRAPS-MIB::notifyMessage = STRING: VDisk M1 switching over to master role

dd on vm12 completes successfully

sh# dd if=/dev/zero of=/mnt/1 bs=1M count=10000

10000+0 records in

10000+0 records out

10485760000 bytes (10 GB) copied, 174.169 s, 60.2 MB/s

multipath shows one of the paths as faulty

sh# multipath -ll

mpathba (36ef899ba04190ade706b96ec16c2ed0b) dm-0 QUADSTOR,VDISK

size=100G features='0' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

|- 13:0:0:0 sdg 8:96 active faulty running

`- 14:0:0:0 sdh 8:112 active ready running

Now when vm10 is back online we check qsync status

On vm10:

sh# /quadstor/bin/qsync -l

Local Remote Pool Dest Addr Role Status

M1 M1 HAPOOL 10.0.13.151 Slave Resyncing

The 'Resyncing' status is for the data written to VDisk M1 on vm11 while vm10 was offline. After the resyncing has completed:

On vm10:

sh# /quadstor/bin/qsync -l

Local Remote Pool Dest Addr Role Status

M1 M1 HAPOOL 10.0.13.151 Slave Enabled

On vm11:

sh# /quadstor/bin/qsync -l

Local Remote Pool Dest Addr Role Status

M1 M1 HAPOOL 10.0.13.150 Master Enabled
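Rather than re-running qsync by hand, the check for resync completion can be polled from a script. This is a minimal sketch under stated assumptions: vdisk_status and wait_until_enabled are hypothetical helper names, and the parsing assumes the qsync -l layout shown above, with one header line, the local VDisk name in the first column and the status in the last.

```shell
# Sketch only: helper names are illustrative, and the column layout of
# `qsync -l` is assumed from the listings in this tutorial.
QSYNC=${QSYNC:-/quadstor/bin/qsync}

# vdisk_status NAME: read `qsync -l` output on stdin and print the
# last column (Status) of the row whose first column is NAME.
vdisk_status() {
    awk -v name="$1" 'NR > 1 && $1 == name { print $NF; exit }'
}

# wait_until_enabled NAME [TRIES] [DELAY_SECONDS]
# Polls until the VDisk reports "Enabled". Returns 0 on success and
# 1 if the status has not been reached after TRIES attempts.
wait_until_enabled() {
    vdisk=$1
    tries=${2:-60}
    delay=${3:-5}
    while [ "$tries" -gt 0 ]; do
        status=$($QSYNC -l | vdisk_status "$vdisk")
        if [ "$status" = "Enabled" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep "$delay"
    done
    return 1
}
```

For example, wait_until_enabled M1 120 5 on vm10 would poll every five seconds for up to ten minutes.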

multipath on vm12 now shows both paths as active,ready

sh# multipath -ll

mpathba (36ef899ba04190ade706b96ec16c2ed0b) dm-0 QUADSTOR,VDISK

size=100G features='0' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

|- 13:0:0:0 sdg 8:96 active ready running

`- 14:0:0:0 sdh 8:112 active ready running